Mastering AI Dev: The Rise of the Context Engineer

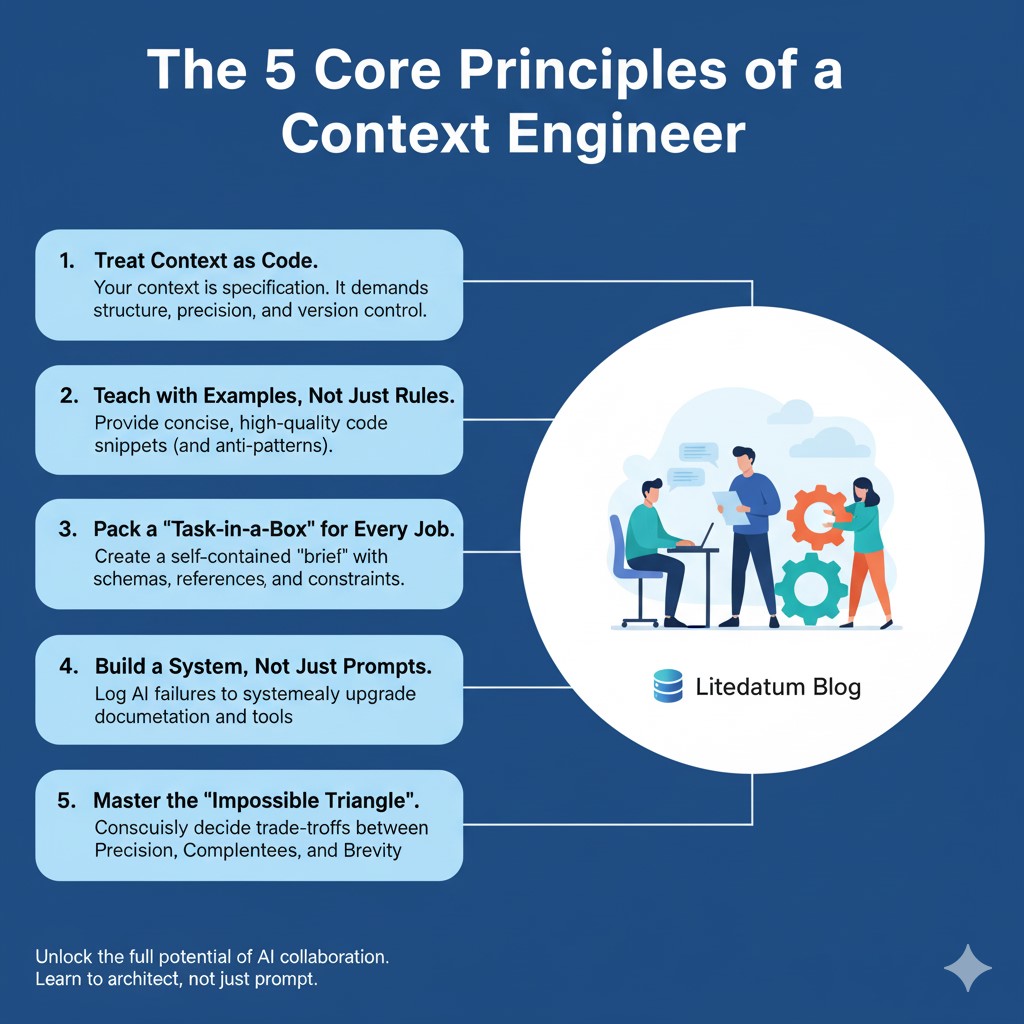

Stop wrestling with prompts. Become a Context Engineer. Learn how to design information environments that empower AI to build exactly what you intend. Master the new best practice.

Mastering AI Dev: The Rise of the Context Engineer

We’re at a peculiar inflection point. We marvel at an AI’s power to generate code, yet we lament its inability to truly grasp our instructions. We spend hours writing detailed documentation and crafting meticulous prompts, hoping the AI will intuit our needs like a seasoned colleague. Instead, we often get code that is plausible in appearance but fundamentally flawed in practice.

What’s going wrong? Perhaps the problem lies in the words we use. We think we’re “writing documentation,” but what we should be doing is “Context Engineering.”

This is more than a trendy new term. It’s a fundamental shift in mindset: from a one-way “information provider” to a two-way “cognitive architect.” Our job is no longer to simply tell the AI what to do, but to design an information environment where it can accurately deduce how to do it.

This article will guide you through this paradigm shift. We’ll start with first principles, exploring the underlying mechanics and physical limits of context. We’ll then make these abstract theories concrete with a carefully chosen case study: a Python data quality rules engine. Finally, we’ll equip you with a practical guide of techniques you can implement immediately. By the end, you’ll understand that the ultimate best practice isn’t a fixed set of tricks, but an engineering mindset focused on continuous iteration and closing the gap between intent and outcome.

Chapter 1: First Principles — The Deep Mechanics of Context

Before diving into specific techniques, we must answer a foundational question: Why is preparing context for an AI such a demanding task in the first place?

From “Information” to “Constraints”: The True Nature of Context

What we provide to an AI may look like “information,” but its true essence is a collection of decision-making constraints. An AI doesn’t “read” your documentation; it navigates the information space you provide to construct the most probable path from a given task to a desired answer.

Therefore, documentation is only “useful” to the extent that the AI can extract executable constraints from it.

- A style guide becomes a set of syntactical constraints on the generated code.

- An API reference is parsed into structural patterns for function calls.

- Business logic is mapped into the branches of a decision tree within the code.

The Cognitive Interface: Speaking the AI’s Native Language

An AI’s ability to “understand” is rooted in pattern matching and sequence prediction. This means the most effective context isn’t the most comprehensive, but the one that best matches its internal cognitive model.

- Structure Aligns with Attention: A clearly layered document helps the AI allocate its limited attention to locate critical information quickly.

- Paradigms Overlap with Training Data: Using industry-standard paradigms (like Pydantic in Python) allows the AI to generalize and apply knowledge learned from its vast training data.

- Constraints Translate to Token Probabilities: An explicit rule (e.g., “Class names must end with

Rule”) is far more effective at guiding the AI’s output than a vague description (e.g., “Use meaningful class names”).

The Limits of Information Theory: The Inescapable Trade-off Triangle

All context engineering is, at its core, an exercise in information compression, transmission, and decompression.

Our goal is to minimize information loss throughout this process. But this forces us into an inescapable trade-off among three competing virtues:

You cannot maximize all three within a finite token budget. If context is too brief, the AI will hallucinate due to a lack of constraints. If it’s too complete and verbose, critical signals will be diluted by noise, causing the AI’s attention to drift.

A “best practice” is simply the optimal balance point on this triangle for a specific task. The root of this challenge is the physical limit of computation: the O(n²) complexity of attention mechanisms and the fundamental conflict between the infinite dimensionality of human intent and the discrete nature of tokens.

Context engineering is the art of using a finite set of discrete symbols, within a finite computational budget, to approximate an infinite-dimensional intent space.

Chapter 2: The Perfect Illustration — Why a Python Rules Engine?

Abstract theory provides clarity, but only a concrete example can truly internalize a lesson. To make the principles above tangible, we’ve chosen a niche yet perfectly illustrative case study: a Python data quality rules engine.

We chose this not because you need to build one, but because its very nature exposes and magnifies all the core challenges of context engineering.

Challenge 1: The Tension Between Abstract and Concrete

A rules engine’s architecture is typically highly abstract (e.g., BaseRule, RuleResult), but the business rules it implements are infinitely concrete (phone number formats, order value ranges, etc.).

This is a perfect mirror of the context engineering dilemma: the AI must both understand your abstract framework and generate code that fulfills specific business needs. It is a living example of “using finite symbols to approximate an infinite intent space.”

Challenge 2: The Conflict Between Reusability and Customization

A rules engine demands that every rule adheres to a uniform interface (for reusability), yet each rule contains unique, custom business logic.

This poses a significant challenge for documentation, which must be both generic and specific. Unlike a relatively standalone React component, every rule in the engine is tightly coupled to the core architecture, demanding that the context be layered with extreme precision.

The Value: An Ideal “Probe” for AI’s Weaknesses

Because a rules engine is a highly custom layer of abstraction, it’s a fantastic litmus test for the limits of an AI’s pattern-matching capabilities. If your BaseRule design deviates from common open-source frameworks, the AI is highly likely to draw upon its training data and generate code that looks right but fails to integrate with your system.

Developing the engine also exposes the physical limits of information compression. A moderately complex rule can require nearly a thousand tokens of context (architectural layers, business context, implementation details). This forces us to make hard choices about which information is truly critical—a process that, in itself, builds a deeper understanding of the task.

Chapter 3: The Practitioner’s Guide — 8 Core Techniques for Engineering Context

Let’s now use our Python rules engine case study to translate theory into a set of actionable techniques.

Technique 1: Structure Your Context — Architecture First, Constraints Upfront

An AI weighs the beginning of your context most heavily. Always place your most critical, non-negotiable “core constraints” and “architectural overview” at the very top.

Example:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

# Data Quality Rules Engine Development Spec

## Core Constraints (Required Reading)

- Python 3.10+ with strict type hints (enforced by mypy).

- All rules MUST inherit from the `BaseRule` abstract class.

- All data inputs MUST be validated by a Pydantic model first.

- Rule execution MUST return a `RuleResult` object.

## Architectural Overview

Engine Pipeline: Input Data → Schema Validator → Rule Engine → Result Aggregator → Output

Core Components:

- rules/ # Rule definitions (grouped by data domain)

- engine/ # Execution engine

- models/ # Data models (Pydantic)

...

This ensures the AI loads the project’s “axioms” and “constitution” before it writes a single line of code.

Technique 2: Prioritize Constraints — Use Schemas, Not Sentences

An AI’s comprehension of structured data (like code or JSON Schema) is far superior to its understanding of natural language. It’s closer to its native tongue. Instead of describing an object in a paragraph, give it the Pydantic model.

Example: Define clear data inputs and outputs with Pydantic. This allows the AI to understand data formats, types, and constraints with perfect precision.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

# models/schemas.py

"""

All data model definitions.

AI must reference this file when developing rules.

"""

from pydantic import BaseModel, Field

from typing import Optional

from datetime import datetime

class DataRecord(BaseModel):

"""The standard data record for the rules engine input."""

id: str = Field(..., description="Unique record identifier")

source: str = Field(..., description="Data source")

fields: dict = Field(..., description="Key-value pairs of fields")

class RuleResult(BaseModel):

"""The standard output for a rule execution."""

passed: bool = Field(..., description="True if the rule passed")

error_code: Optional[str] = Field(None, description="Error code if failed")

message: str = Field(..., description="Description of the result")

In your prompt, you can now reference these directly: “The function takes an input conforming to models/schemas.py::DataRecord and must return a models/schemas.py::RuleResult object.” This method is information-dense and completely unambiguous.

Technique 3: Templatize Your Context — Create Task-Specific Briefs

Don’t expect an AI to find what it needs in a 50-page document. For each common development task, create a condensed “Task Brief” that pre-packages all relevant context.

Example: Task Brief for a “Phone Number Format” Rule

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

# TASK: Implement "Phone Number Format Validation" Rule

## Current Context

- File Location: `rules/contact/phone_validation_rule.py`

- Data Model: `models/contact.py::ContactInfo`

## Requirements

1. Inherit from `BaseRule` and implement the `validate()` method.

2. On failure, return `RuleResult(passed=False, error_code="INVALID_PHONE", ...)`.

3. Use the `phonenumbers` library; do not hardcode regex.

## References

- Similar rule: `rules/contact/email_validation_rule.py`

- Base class API: `docs/api/base_rule.md`

## Expected Output

```python

# The following test case must pass

assert PhoneValidationRule().validate(ContactInfo(phone="13800138000")).passed == True

```

This brief acts as a “starter kit” for the task, drastically reducing the AI’s cognitive load.

Technique 4: Build a Code Snippet Library — Provide Few-Shot Examples

AIs excel at learning from examples. Create a “pattern library” in your documentation that includes best practices, common patterns, and anti-patterns to avoid. This is a shortcut to guiding the AI toward high-quality code.

Example:

In docs/examples/rule_patterns.md, provide templates for standard rules, rules that need to access external data, composite rules, etc., and explicitly list anti-patterns.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

# ✅ Standard Rule Template

class MyCustomRule(BaseRule):

def validate(self, data: MyDataModel, context: Dict = None) -> RuleResult:

# ... clean implementation ...

# ❌ Anti-Patterns to Avoid

# Anti-Pattern 1: Using print for debugging

def validate(self, data):

print(f"Checking {data}") # ❌ Incorrect. Use logger.debug()```

This serves as both a textbook for the AI and a unified style guide for the human team.

### Technique 5: Partition Your Context — Supply Information in Layers

Structure your documentation by domain: "Core Architecture (must-read)," "Domain Knowledge (read if relevant)," and "Reference Implementations (optional)." This layered structure aligns perfectly with an AI's attention mechanism and human cognitive workflows.

**Example:**

/docs /core # Core architecture, required for all tasks - architecture.md - base_rule_api.md /domains # Grouped by data domain - financial/ - customer/ /examples # Reference implementations - rule_patterns.md

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

In a Task Brief, you can now provide precise instructions: "You must read all docs in `/docs/core/`. Refer to implementations in `/docs/domains/customer/` and `/rules/customer/`."

### Technique 6: Use Incremental Context — Refine Complex Needs Through Dialogue

For complex tasks, don't try to provide everything in the first prompt. Use a conversational, incremental approach. Have the AI generate a scaffold first, then progressively add constraints and details.

**Example: Developing an "Anomalous Order Value" Rule**

1. **Round 1:** Provide the core requirement to generate the basic class structure.

2. **Round 2:** Add the logic for querying historical data, including a sample SQL query.

3. **Round 3:** Introduce performance constraints, such as the need for a caching mechanism.

This process mimics human pair programming, breaking a large problem into smaller, manageable steps and converging on the final solution.

### Technique 7: Automate with Dynamic Context — Use Tools to Generate Context

Drastically improve efficiency and accuracy by using scripts to automatically generate your context. A script can scan the file tree, pull in relevant data models, and find existing rules in the same domain to create a comprehensive prompt.

**Example: A `rule_generator.py` Tool**

Running `python tools/rule_generator.py --domain customer --rule-name id-card-validation` could automatically create the boilerplate file and generate a Markdown document containing all the necessary context. You simply copy this into your prompt.

### Technique 8: Create a Feedback Loop — Learn and Evolve from AI Failures

Log the AI's failures. Analyze why it made a mistake, then update your documentation, code templates, and examples to prevent it from happening again. This isn't just "debugging"; it's the "evolution" of your context system.

**Example: An `ai_feedback.md` Log**

```markdown

# AI Development Issues & Resolutions (Continuously Updated)

### Issue 1: AI frequently forgets to handle NULL values.

**Symptom:** Generated rules throw an exception when input data is missing.

**Resolution:** Added a mandatory "NULL Value Handling" pattern to `rule_patterns.md`.

**Effective Date:** 2025-11-01

---

This document itself becomes a living record of your context engineering system’s evolution.

The Final Answer: The Future of Context Engineering

Let’s return to our original question: What is the best practice for AI-assisted development?

- The Surface Answer: It’s the eight techniques listed above.

- The Deeper Answer: It’s a commitment to three principles—identifying the “axioms” of your project, communicating in the AI’s “native language,” and embracing imperfect iteration.

- The Fundamental Answer: Context engineering is the act of transmitting your mental model of intent across a limited channel, using the receiver’s (the AI’s) decoding mechanisms.

This reveals the most profound truth of all: There is no such thing as a universal “best practice.”

There are only local optima—the best solutions for a specific task, with a specific model, under specific context window limitations.

The true “best practice,” therefore, is to acknowledge and embrace this locality. It is to build a system that allows for continuous iteration and optimization. This is more than a technique; it is a philosophy—a way of thinking, adapting, and co-evolving in the new paradigm of human-AI collaboration.