The Five Pillars of Data Truth: How Quality Metrics Solve Report Mismatches

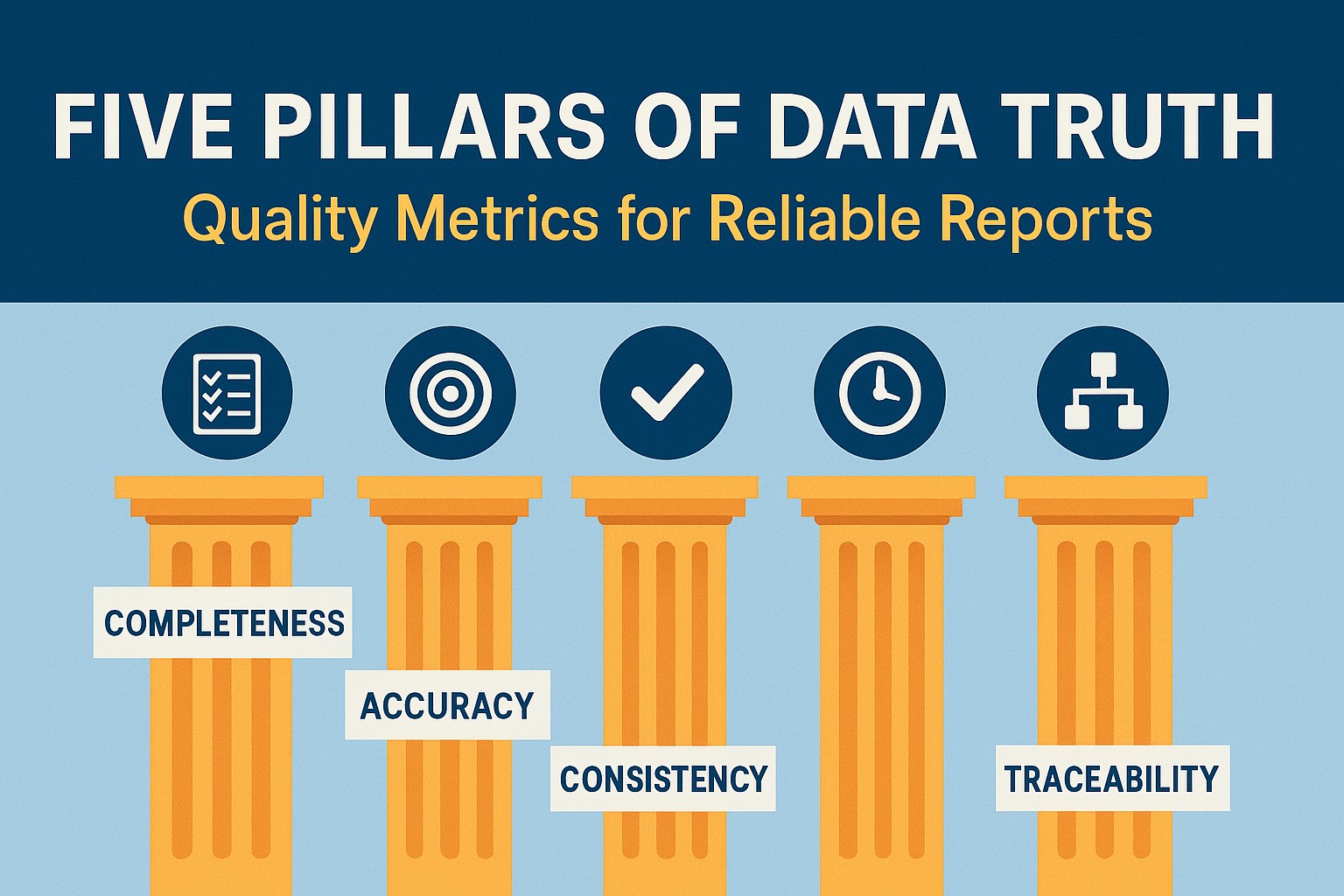

Discover the five critical dimensions of data quality that transform chaotic reports into trustworthy insights. Learn how to measure and monitor completeness, accuracy, consistency, freshness, and traceability.

3:47 AM. Another Slack notification pierces the silence.

“Sales numbers don’t match again. Finance shows $2.3M, ops dashboard says $2.1M. Can we get this fixed before the board meeting?”

Sound familiar? You’re staring at your laptop screen, coffee growing cold, wondering how two systems pulling from the same source can tell entirely different stories. The sales team suspects missing orders. Finance points fingers at currency conversion rates. Operations questions the aggregation logic. Meanwhile, the data team scrambles to play detective without a proper crime scene investigation toolkit.

This scenario repeats itself in organizations worldwide, every single day. But here’s the thing most data practitioners don’t realize: these mismatches aren’t random acts of digital chaos. They’re symptoms of a deeper architectural truth that we’ve collectively overlooked.

The single, irreducible principle behind every high-functioning data organization is this: trust is a measurable construct, not an aspirational state.

Trust doesn’t emerge from good intentions or elegant pipelines. It crystallizes from systematic measurement across five critical dimensions. When these metrics align, reports converge. When they drift, numbers diverge like tectonic plates, leaving everyone standing on shaky ground.

Let’s dissect each pillar with the precision of a forensic pathologist examining evidence.

Pillar One: Completeness - The Foundation of Trust

Completeness answers the fundamental question: Is all the data that should be there actually there?

This isn’t just about NULL values, though they matter. Completeness operates across three dimensions: rows, columns, and partitions. Missing rows mean lost transactions. Missing columns suggest schema drift. Missing partitions indicate systematic failures in your ingestion pipeline.

The most insidious completeness failures happen gradually. Monday’s batch processes 98% of expected records. Tuesday drops to 97%. By Friday, you’re missing 5% of your revenue data, but nobody notices because each daily delta feels negligible.

1

2

3

4

5

6

7

8

9

10

11

-- Row count baseline monitoring

SELECT

DATE(created_at) as batch_date,

COUNT(*) as daily_count,

LAG(COUNT(*)) OVER (ORDER BY DATE(created_at)) as prev_count,

(COUNT(*) - LAG(COUNT(*)) OVER (ORDER BY DATE(created_at))) /

NULLIF(LAG(COUNT(*)) OVER (ORDER BY DATE(created_at)), 0) * 100 as pct_change

FROM orders

WHERE created_at >= CURRENT_DATE - INTERVAL '7 days'

GROUP BY DATE(created_at)

ORDER BY batch_date;

Smart teams implement completeness checks at three levels:

Partition-level monitoring

Catches wholesale data loss. If your daily partition contains 10,000 fewer records than yesterday without a corresponding business explanation, something broke upstream.

Column-level density tracking

Reveals schema evolution. When a critical field suddenly shows 30% NULL values instead of the historical 2%, you’ve likely encountered an API change or source system modification.

Cross-system reconciliation

Exposes integration gaps. Your CRM says 1,847 new customers this month. Your data warehouse counts 1,803. Those 44 missing records might represent $50K in annual recurring revenue.

Tools like dbt make partition monitoring straightforward:

1

2

3

4

5

6

7

8

9

models:

- name: daily_orders

tests:

- dbt_utils.equal_rowcount:

compare_model: ref('daily_orders_source')

- dbt_utils.recency:

datepart: day

field: created_at

interval: 1

But here’s what the documentation won’t tell you: completeness monitoring only works if you establish baselines during stable periods. Running these tests during Black Friday or end-of-quarter spikes will generate false positives that train your team to ignore alerts.

The real art lies in building adaptive thresholds that account for business seasonality while remaining sensitive to genuine data loss.

Pillar Two: Accuracy - The Precision Instrument

Accuracy measures how closely your data reflects reality. But here’s the paradox: in data systems, reality is often unknowable. We proxy truth through business rules, referential integrity, and statistical bounds.

The most dangerous accuracy failures masquerade as reasonable values. A customer age of 150 years will trigger alerts. A customer age of 35 when the real age is 53? That slips through, corrupting lifetime value calculations and segmentation models for months.

Accuracy validation operates across multiple dimensions:

Domain validation

Ensures values fall within acceptable ranges:

1

2

3

4

5

6

7

# Great Expectations example

import great_expectations as ge

df = ge.from_pandas(orders_df)

df.expect_column_values_to_be_between("order_amount", min_value=0, max_value=100000)

df.expect_column_values_to_match_regex("email", r'^[^@]+@[^@]+\.[^@]+$')

df.expect_column_values_to_not_be_null("order_id")

Cross-field consistency

Catches logical contradictions:

1

2

3

4

5

-- Flag orders where shipping cost exceeds order value

SELECT order_id, order_amount, shipping_cost

FROM orders

WHERE shipping_cost > order_amount * 0.5

AND order_amount > 0;

Temporal accuracy

Validates time-series relationships:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

# Pandera schema with temporal constraints

import pandera as pa

schema = pa.DataFrameSchema({

"order_date": pa.Column(pa.DateTime, checks=[

pa.Check.greater_than_or_equal_to(pd.Timestamp("2020-01-01")),

pa.Check.less_than_or_equal_to(pd.Timestamp.now())

]),

"ship_date": pa.Column(pa.DateTime, nullable=True),

})

# Custom check: ship_date must be after order_date

@pa.check("ship_date")

def ship_after_order(ship_date, order_date):

return ship_date.isna() | (ship_date >= order_date)

The subtle insight most practitioners miss: accuracy requirements vary by use case. Financial reporting demands different precision than marketing analytics. A 2% variance in customer acquisition cost might be acceptable for campaign optimization but catastrophic for investor reporting.

Build accuracy checks that reflect your data’s ultimate purpose, not just its immediate structure.

Pillar Three: Consistency - The Harmony Principle

Consistency ensures that identical concepts produce identical results across different systems, time periods, and processing methods. It’s the difference between a symphony and a cacophony.

Most consistency failures emerge from semantic drift. The “customer” entity in your CRM includes prospects. The “customer” table in your data warehouse only includes paying users. Both definitions seem reasonable in isolation, but they create impossible reconciliation puzzles downstream.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

-- Multi-source consistency check

WITH crm_summary AS (

SELECT

DATE_TRUNC('month', created_date) as month,

COUNT(*) as crm_customers,

SUM(lifetime_value) as crm_ltv

FROM crm_customers

WHERE status = 'active'

GROUP BY 1

),

warehouse_summary AS (

SELECT

DATE_TRUNC('month', first_order_date) as month,

COUNT(DISTINCT customer_id) as warehouse_customers,

SUM(total_spent) as warehouse_ltv

FROM dim_customers

GROUP BY 1

)

SELECT

COALESCE(c.month, w.month) as month,

c.crm_customers,

w.warehouse_customers,

ABS(c.crm_customers - w.warehouse_customers) as customer_diff,

c.crm_ltv,

w.warehouse_ltv,

ABS(c.crm_ltv - w.warehouse_ltv) as ltv_diff

FROM crm_summary c

FULL OUTER JOIN warehouse_summary w ON c.month = w.month

WHERE ABS(c.crm_customers - w.warehouse_customers) > 5

OR ABS(c.crm_ltv - w.warehouse_ltv) > 1000;

Smart consistency monitoring uses aggregation hashes to detect drift without exposing raw data:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

# Generate consistency fingerprints

import hashlib

import pandas as pd

def generate_consistency_hash(df, group_cols, value_cols):

"""Generate reproducible hash for data consistency checking"""

summary = df.groupby(group_cols)[value_cols].agg(['sum', 'count', 'mean']).round(2)

hash_input = summary.to_string()

return hashlib.md5(hash_input.encode()).hexdigest()

# Compare hashes across systems

crm_hash = generate_consistency_hash(crm_data, ['month'], ['revenue'])

warehouse_hash = generate_consistency_hash(warehouse_data, ['month'], ['revenue'])

if crm_hash != warehouse_hash:

trigger_consistency_alert("Revenue aggregation mismatch detected")

Data contracts formalize consistency requirements:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

# Data contract specification

version: 1.0

dataset: customer_metrics

owner: [email protected]

schema:

- name: customer_id

type: string

constraints:

- unique

- not_null

- name: lifetime_value

type: decimal(10,2)

constraints:

- min: 0

business_meaning: "Total revenue from customer, USD"

guarantees:

- freshness: "< 24 hours"

- completeness: "> 99.5%"

- consistency: "matches CRM customer count - 1%"

The critical insight: consistency isn’t binary. It’s a spectrum measured in tolerances. Define acceptable variance ranges based on business impact, not technical perfection.

Pillar Four: Freshness - The Temporal Dimension

Freshness measures how current your data is relative to its expected update schedule. Stale data doesn’t just impact decision-making - it erodes confidence in your entire platform.

The challenge lies in distinguishing between acceptable delays and systemic failures. A morning batch job running 15 minutes late might be fine. The same job arriving 6 hours late definitely isn’t.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

-- Freshness monitoring query

WITH freshness_check AS (

SELECT

table_name,

MAX(updated_at) as last_update,

EXTRACT(EPOCH FROM (CURRENT_TIMESTAMP - MAX(updated_at))) / 3600 as hours_since_update,

expected_update_frequency_hours

FROM (

SELECT 'orders' as table_name, updated_at, 1 as expected_update_frequency_hours

FROM orders

UNION ALL

SELECT 'customers' as table_name, updated_at, 24 as expected_update_frequency_hours

FROM customers

) t

GROUP BY table_name, expected_update_frequency_hours

)

SELECT

table_name,

last_update,

hours_since_update,

expected_update_frequency_hours,

CASE

WHEN hours_since_update > expected_update_frequency_hours * 2 THEN 'CRITICAL'

WHEN hours_since_update > expected_update_frequency_hours * 1.5 THEN 'WARNING'

ELSE 'OK'

END as freshness_status

FROM freshness_check;

Airflow SLA monitoring provides operational awareness:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

from airflow.models import DAG

from airflow.operators.python_operator import PythonOperator

from datetime import datetime, timedelta

default_args = {

'owner': 'data-team',

'depends_on_past': False,

'start_date': datetime(2024, 1, 1),

'sla': timedelta(hours=2), # Alert if task takes > 2 hours

'retries': 2,

'retry_delay': timedelta(minutes=15)

}

dag = DAG(

'daily_orders_etl',

default_args=default_args,

description='Process daily order data',

schedule_interval='0 2 * * *', # Run at 2 AM daily

catchup=False

)

def check_data_freshness(**context):

# Custom freshness validation logic

execution_date = context['execution_date']

# Check if data arrived within expected window

pass

freshness_check = PythonOperator(

task_id='check_freshness',

python_callable=check_data_freshness,

dag=dag

)

Grafana dashboards provide visual freshness monitoring:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

# Grafana dashboard panel config

targets:

- expr: |

max(time() - data_last_update_timestamp{table=~"orders|customers"}) by (table) / 3600

legendFormat: "{{ table }}"

refId: A

thresholds:

- color: green

value: null

- color: yellow

value: 2

- color: red

value: 6

title: "Data Freshness (Hours Since Last Update)"

The key insight: freshness requirements cascade through your pipeline. If upstream data arrives 2 hours late, every downstream process inherits that delay unless you build buffering mechanisms.

Plan for temporal dependencies, not just logical ones.

Pillar Five: Traceability - The Detective’s Toolkit

Traceability answers the critical question: When something goes wrong, how quickly can you identify the root cause?

Every data anomaly tells a story. The challenge lies in having the instrumentation to read that story. Without proper lineage tracking, debugging becomes archaeology-layers of forgotten transformations and deprecated pipelines that nobody quite remembers.

Modern data lineage tools like OpenLineage integrate directly with your processing engines:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

# OpenLineage integration with Apache Spark

from openlineage.spark import SparkSession

spark = SparkSession.builder \

.appName("order_processing") \

.config("spark.openlineage.host", "http://lineage-server:5000") \

.getOrCreate()

# Lineage automatically tracked

orders_df = spark.read.parquet("s3://data-lake/orders/")

processed_df = orders_df.filter(col("status") == "completed") \

.groupBy("customer_id") \

.agg(sum("amount").alias("total_spent"))

processed_df.write.parquet("s3://data-warehouse/customer_totals/")

SQL parsing creates queryable lineage graphs:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

-- Lineage metadata table structure

CREATE TABLE data_lineage (

job_id VARCHAR(255),

run_id VARCHAR(255),

source_table VARCHAR(255),

target_table VARCHAR(255),

transformation_type VARCHAR(50),

created_at TIMESTAMP,

sql_query TEXT

);

-- Query to find upstream dependencies

WITH RECURSIVE lineage_tree AS (

SELECT source_table, target_table, 1 as depth

FROM data_lineage

WHERE target_table = 'customer_revenue_summary'

UNION ALL

SELECT l.source_table, l.target_table, lt.depth + 1

FROM data_lineage l

JOIN lineage_tree lt ON l.target_table = lt.source_table

WHERE lt.depth < 10 -- Prevent infinite recursion

)

SELECT DISTINCT source_table, depth

FROM lineage_tree

ORDER BY depth, source_table;

Advanced traceability includes change tracking:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

# Git-based transformation versioning

import git

import json

from datetime import datetime

def track_transformation_version(sql_file_path, execution_context):

repo = git.Repo(search_parent_directories=True)

current_commit = repo.head.commit.hexsha

metadata = {

"commit_hash": current_commit,

"file_path": sql_file_path,

"execution_time": datetime.utcnow().isoformat(),

"pipeline_version": execution_context.get("pipeline_version"),

"upstream_tables": execution_context.get("source_tables", []),

"downstream_tables": execution_context.get("target_tables", [])

}

# Store in lineage system

store_lineage_metadata(metadata)

The most sophisticated traceability systems automatically annotate anomalous records with their lineage chain:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

-- Anomaly detection with lineage context

WITH anomalies AS (

SELECT

customer_id,

revenue,

ABS(revenue - AVG(revenue) OVER()) / STDDEV(revenue) OVER() as z_score

FROM customer_revenue_daily

WHERE z_score > 3 -- Statistical outliers

),

enriched_anomalies AS (

SELECT

a.*,

l.source_table,

l.transformation_type,

l.job_id,

l.created_at as lineage_timestamp

FROM anomalies a

JOIN data_lineage l ON l.target_table = 'customer_revenue_daily'

)

SELECT * FROM enriched_anomalies

ORDER BY z_score DESC;

Here’s the insight most organizations miss: traceability isn’t just about debugging. It’s about building institutional memory. When team members leave, their tribal knowledge of data quirks and transformation logic leaves with them. Proper lineage documentation creates a searchable record of every decision and change.

The Integration Imperative

These five pillars don’t operate in isolation. They form an interconnected quality ecosystem where weakness in one dimension amplifies problems in others.

Consider a typical failure cascade:

- Completeness failure: Source system drops 5% of records due to API timeout

- Freshness impact: Batch job completes but with incomplete data

- Accuracy degradation: Averages and totals calculate correctly but on wrong dataset

- Consistency breakdown: Reports show different numbers because some use cached complete data, others use fresh incomplete data

- Traceability confusion: Without lineage tracking, debugging requires manual detective work across multiple systems

Smart data teams implement cascading alerts that acknowledge these interdependencies:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

# Multi-dimensional quality scoring

def calculate_quality_score(completeness_pct, accuracy_pct, consistency_pct,

freshness_hours, traceability_coverage):

# Weighted scoring based on business criticality

weights = {

'completeness': 0.3,

'accuracy': 0.25,

'consistency': 0.2,

'freshness': 0.15,

'traceability': 0.1

}

# Convert metrics to 0-100 scale

freshness_score = max(0, 100 - (freshness_hours * 10)) # Degrade by 10 per hour

score = (

completeness_pct * weights['completeness'] +

accuracy_pct * weights['accuracy'] +

consistency_pct * weights['consistency'] +

freshness_score * weights['freshness'] +

traceability_coverage * weights['traceability']

)

return min(100, max(0, score))

# Quality-based alerting

quality_score = calculate_quality_score(98.5, 99.2, 97.8, 2.5, 95.0)

if quality_score < 85:

send_critical_alert("Data quality degraded", quality_score)

elif quality_score < 95:

send_warning_alert("Data quality below threshold", quality_score)

Implementation Roadmap: From Theory to Practice

Understanding these principles intellectually differs vastly from implementing them operationally. Here’s a pragmatic rollout strategy that won’t overwhelm your existing infrastructure:

Week 1-2: Establish Completeness and Freshness Monitoring

Start with the fundamentals. These metrics are easiest to implement and provide immediate value:

1

2

3

4

5

6

7

8

9

10

11

-- Quick completeness check

CREATE VIEW daily_completeness_check AS

SELECT

'orders' as table_name,

DATE(created_at) as date,

COUNT(*) as record_count,

LAG(COUNT(*)) OVER (ORDER BY DATE(created_at)) as prev_day_count,

ABS(COUNT(*) - LAG(COUNT(*)) OVER (ORDER BY DATE(created_at))) as variance

FROM orders

GROUP BY DATE(created_at)

ORDER BY date DESC;

Week 3-4: Add Accuracy and Consistency Validation

Layer in business rule validation and cross-system checks:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

# dbt tests configuration

version: 2

models:

- name: orders

tests:

- unique:

column_name: order_id

- not_null:

column_name: customer_id

- accepted_values:

column_name: status

values: ['pending', 'confirmed', 'shipped', 'delivered', 'cancelled']

columns:

- name: order_amount

tests:

- dbt_utils.expression_is_true:

expression: ">= 0"

Week 5-6: Implement Basic Traceability

Begin tracking lineage for critical data flows:

1

2

3

4

5

6

7

8

9

10

11

12

# Simple lineage tracking

def log_transformation(source_tables, target_table, transformation_sql):

lineage_entry = {

"timestamp": datetime.utcnow(),

"source_tables": source_tables,

"target_table": target_table,

"transformation": transformation_sql,

"job_id": os.environ.get("AIRFLOW_RUN_ID", "manual")

}

# Log to your preferred system (database, S3, etc.)

write_lineage_log(lineage_entry)

Month 2: Integrate Quality Metrics into CI/CD

Embed quality checks directly into your deployment pipeline:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

# GitHub Actions workflow

name: Data Quality Checks

on:

push:

paths: ['dbt/**', 'sql/**']

jobs:

quality-tests:

runs-on: ubuntu-latest

steps:

- uses: actions/checkout@v2

- name: Run dbt tests

run: |

dbt test --profiles-dir profiles

dbt run-operation generate_quality_report

- name: Quality gate

run: |

python scripts/quality_gate.py --threshold 95

The Measurement Paradox

Here’s the uncomfortable truth that most data quality frameworks ignore: measurement changes behavior, often in unexpected ways.

When you start monitoring completeness, teams become hyper-focused on record counts while potentially neglecting data accuracy. When you measure freshness, pipelines might rush processing and introduce errors. When you track consistency, systems might artificially align numbers without addressing root causes.

This isn’t an argument against measurement—it’s a call for thoughtful implementation. Design your quality metrics to encourage the right behaviors:

- Reward early detection over perfect scores: A system that catches 90% of issues immediately beats one that finds 100% of issues three days later

- Balance competing objectives: Don’t let freshness requirements compromise accuracy standards

- Make quality metrics visible to data consumers: When business users understand quality scores, they make better decisions about when to trust or question reports

Beyond Monitoring: Building a Quality Culture

Technology provides the foundation, but culture determines whether quality initiatives succeed or become another dashboard that nobody checks.

The most effective data organizations treat quality metrics as leading indicators, not lagging measurements. They use these metrics to drive conversations about process improvement, not to assign blame after failures occur.

This requires reframing quality monitoring from “catching problems” to “enabling trust.” When a completeness check identifies missing data, the response shouldn’t be “fix the alert.” It should be “understand why our upstream system behavior changed and build resilience against similar failures.”

Quality metrics succeed when they become part of your organization’s daily vocabulary. When product managers ask about data freshness before making decisions. When analysts mention completeness scores in their reports. When executives reference quality trends in strategic discussions.

The Path Forward

Every data mismatch between reports represents a failure of trust, not just technology. These five quality pillars-completeness, accuracy, consistency, freshness, and traceability-provide the systematic framework needed to build and maintain that trust.

But remember: metrics are instruments, not destinations. They guide improvement efforts and provide early warning systems, but they don’t automatically solve underlying data challenges. The real work lies in using these measurements to drive systematic improvements in data architecture, process design, and organizational culture.

Start this week. Pick two metrics that address your most painful report mismatches. Implement basic monitoring. Set up alerts. Begin the conversations about acceptable tolerances and improvement priorities.

Your 3:47 AM debugging sessions will thank you.

Trust isn’t built through perfection-it’s constructed through measurement, transparency, and systematic improvement. The five pillars provide your blueprint. The rest is execution.

Ready to implement these quality metrics in your organization? Share your experience with data quality challenges and victories. The data community learns best when we’re honest about both our failures and our solutions.