The Physics of IFRS 17 Data Architecture

Master IFRS 17 data architecture with core engineering principles. Unpack event sourcing, bi-temporal models, and the physics of financial time-travel.

You can’t fix your IFRS 17 data architecture with better technology. You have to fix your organization first. We established that in a previous discussion, but for those of us in the trenches—the engineers and architects—the conversation eventually has to come back to code and design.

So, let’s talk about the hard science of building a data system for this notoriously complex accounting standard. These aren’t just best practices; they are principles as unavoidable as the laws of physics.

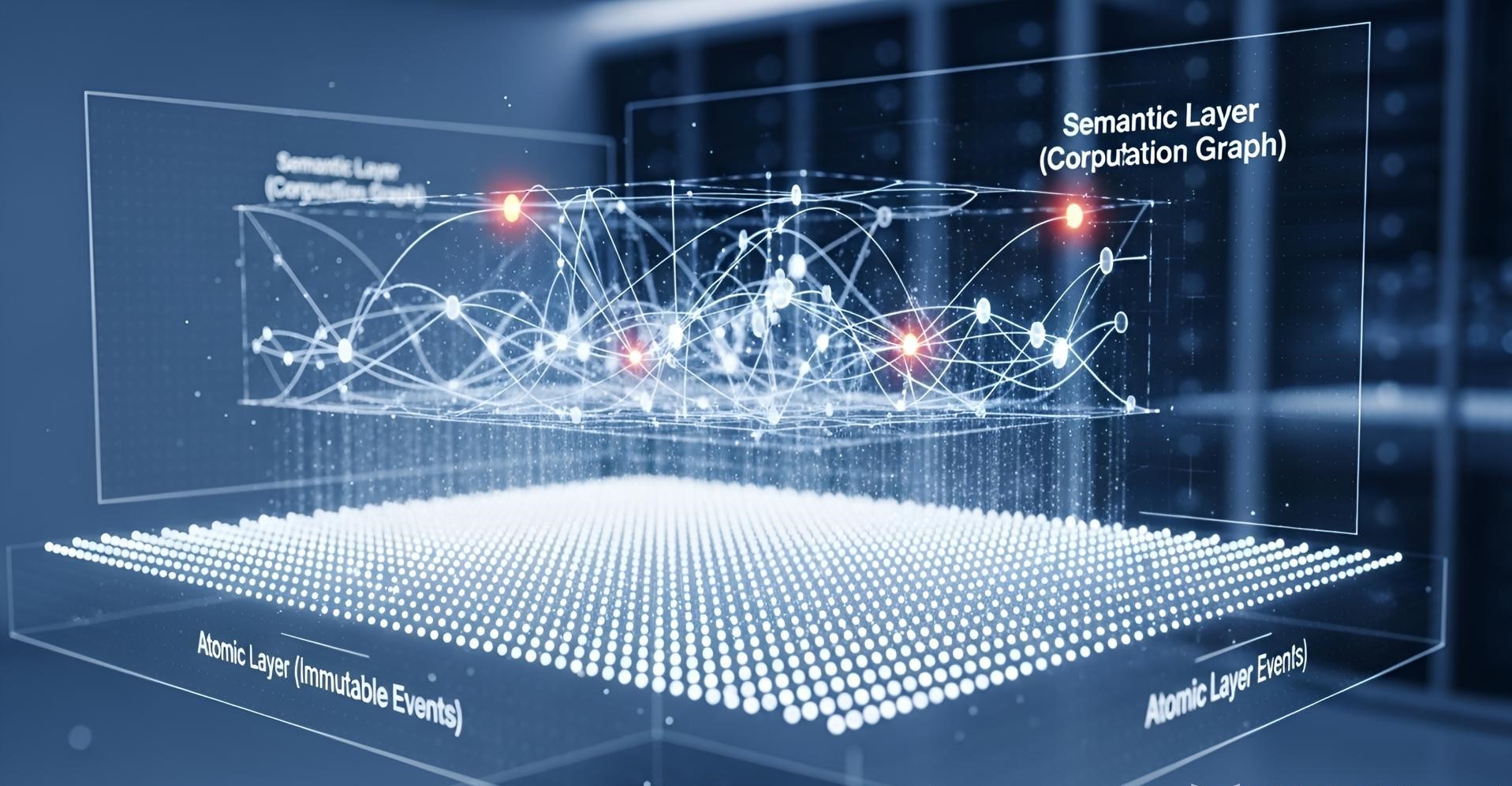

Why Two Layers Are Not a Choice, But a Necessity

The classic “atomic” and “semantic” data layers aren’t just a matter of design preference. They are a mathematical inevitability dictated by the core of IFRS 17.

Consider the recursive formula for the Contractual Service Margin (CSM):

CSM(t) = CSM(t-1) + InterestAccretion + NewBusiness - Amortization ± ChangeInEstimates

This simple-looking equation imposes two rigid requirements on your data system:

- Immutable historical facts: The state at time

t-1cannot change. - Mutable calculation logic: The logic for

ChangeInEstimateswill definitely change.

If you store facts and logic together, every change in actuarial assumptions would force you to rewrite history. That’s a non-starter. You can’t go back in time and change the market interest rate from last quarter just because your model changed today. The only sane solution is to separate the unchangeable past (the atomic layer) from the ever-changing calculations (the semantic layer).

The True Nature of an Atom

So what, precisely, is an “atom” in this atomic layer?

It’s not a policy. It’s not a transaction. It’s a state-change event.

1

2

3

4

5

6

7

Event = {

"timestamp": "2025-10-26T10:00:00Z", // Immutable

"entity_id": "POLICY-12345",

"event_type": "ENDORSEMENT_ISSUED",

"payload": { "coverage_change": "+10000" },

"source_system": "PolicyAdminSuite"

}

The critical insight here is that an atom isn’t the smallest unit of data; it’s the smallest unit of business change. Your atomic layer shouldn’t store the current state of a policy. It must store the full sequence of events that led to that state. This is Event Sourcing, tailored for the insurance industry.

The Computational Heart of the Semantic Layer

If the atomic layer is a ledger of events, the semantic layer is not just “another view of the data.” It is the materialization of a computation graph.

Every IFRS 17 concept is a node in this graph:

- Cohort (Group of Contracts): A function that aggregates atomic events based on grouping rules.

- Fulfillment Cash Flows: A function that discounts future cash flows using an interest rate curve.

- CSM: A recursive function that operates on the previous CSM and current period changes.

The real challenge is that these nodes are deeply interconnected. The structure is a Directed Acyclic Graph (DAG).

Architecting the semantic layer is really about designing how to efficiently compute, cache, and invalidate parts of this DAG.

How to Connect to Your Existing Data Platform

Your shiny new IFRS 17 system doesn’t live in a vacuum. It has to connect to your existing data landscape, and there are three basic topologies, each with its own physical constraints.

Model A: The Leech

This is the least invasive approach. But it suffers from accumulated latency, and data quality issues from upstream systems get amplified with each hop.

Model B: The Twin

Here, you create a dedicated, optimized path for IFRS 17. It’s faster and cleaner, but now you have a new problem: keeping the twin systems consistent.

Model C: The Big Bang

This is the purist’s choice: rebuild your core data platform around an event-sourced atomic layer that serves everyone. It offers a single source of truth but comes with enormous cost and risk.

Your choice depends on what we might call “data gravity”—a function of your data volume, the number of systems that depend on it, and the cost of changing them.

A Layered Strategy for Data Quality

“Data quality” isn’t a single concept. It means different things at different layers.

At the atomic layer, quality means completeness and immutability. You’re checking if the story makes sense. Did every policy event stream start with a CREATE event?

1

2

3

4

5

6

7

8

9

10

-- Check for orphaned event chains

SELECT policy_id

FROM events e1

WHERE e1.event_type != 'CREATE'

AND NOT EXISTS (

SELECT 1 FROM events e2

WHERE e2.policy_id = e1.policy_id

AND e2.event_type = 'CREATE'

AND e2.timestamp < e1.timestamp

);

At the semantic layer, quality means consistency and auditability. You’re checking if the math adds up. Does the CSM roll-forward actually balance?

1

2

3

4

5

6

# Check if the CSM roll-forward calculation is balanced

assert abs(

csm_closing_balance - (

csm_opening_balance + interest + new_business - amortization + adjustments

)

) < 0.01

The crucial distinction is this: the atomic layer chases truth, while the semantic layer chases correctness. Truth and correctness are not the same. An endorsement event may have truly happened (recorded in the atomic layer), but for IFRS 17 purposes, its financial impact may need to be retrospectively adjusted (handled in the semantic layer).

The Fundamental Challenge of Time

The deepest technical problem in IFRS 17 is managing multiple, conflicting timelines:

- Transaction Time: When the event actually happened (e.g., a policy was signed on Christmas Day).

- System Time: When the event was recorded in a system (e.g., entered on January 3rd).

- Reporting Time: When the event was included in a financial report (e.g., part of the January close on the 20th).

- Effective Time: When the event’s business impact begins (e.g., the policy is effective from January 1st).

Your atomic layer must capture all four. Your semantic layer must be able to reconstruct history from the perspective of any one of them. This is why a bi-temporal data model is non-negotiable.

1

2

3

4

5

6

7

8

9

10

CREATE TABLE atomic_events (

-- The business timeline

valid_from DATE,

valid_to DATE,

-- The system's record-keeping timeline

system_from TIMESTAMP,

system_to TIMESTAMP,

-- The event payload

event_data JSONB

);

The Trade-Off: Computation vs. Storage

The ultimate architectural decision is what to pre-compute and what to compute on the fly. This isn’t just about performance; it’s a trade-off between auditability and flexibility.

- Pre-compute more: Queries are fast, but auditing is a nightmare. The calculation logic is fossilized inside historical data.

- Compute on-the-fly: Auditing is easy because you can re-run any calculation for any point in time, but the performance overhead can be massive.

IFRS 17 is unique because regulators demand that you can prove a historical calculation was based on the assumptions known at that time. This leads to a counter-intuitive conclusion: the semantic layer shouldn’t store final results. It should store calculation snapshots.

1

2

3

4

5

6

7

8

9

10

11

12

class CalculationSnapshot:

def __init__(self, timestamp, assumptions, formula):

self.timestamp = timestamp

self.assumptions = assumptions # A dict of historical assumptions

self.formula = formula # The formula string used at the time

def recalculate(self, atomic_data):

# Re-run the historical calculation with historical inputs

return eval(self.formula, {

'data': atomic_data,

'assumptions': self.assumptions

})

An IFRS 17 Version of the CAP Theorem

Every distributed system faces the CAP Theorem—a choice between Consistency, Availability, and Partition Tolerance. IFRS 17 data platforms have their own impossible triangle, especially during the month-end close:

- Consistency: All systems (actuarial, finance, operations) see the same data at the same time.

- Availability: The systems must remain online and usable during the high-pressure closing period.

- Partition Tolerance: The actuarial, finance, and business systems can operate independently without bringing each other down.

During the reporting crunch, you have to sacrifice one. Most organizations choose to sacrifice Consistency. This is the technical root of all those late-night “manual journal entries” and post-close adjustments.

Returning to Engineering Reality

While there is no single “best practice,” a few principles are as close to physical laws as you can get:

- Event Sourcing: The atomic layer must be append-only. No updates.

- Bi-Temporality: You must separate business time from system time.

- DAG-Based Computation: The semantic layer’s dependencies must not have cycles.

- Idempotency: Every calculation must be repeatable and yield the same result.

- Snapshot Isolation: Historical calculations must use historical snapshots of logic and assumptions.

These aren’t suggestions. They are the requirements imposed by the mathematical structure of IFRS 17 itself.

Ultimately, the challenge of IFRS 17 data architecture is that you are being asked to build a time-traveling accounting system on top of a tech stack designed for simple create, read, update, and delete operations. This is less an architecture problem and more a physics problem: how do you maintain temporal reversibility in a system that is constantly moving forward?

Recommended Reading

This article is part of a series exploring the multi-layered challenges of IFRS 17 implementation. While this piece focuses on the technical physics of data architecture, the broader context involves organizational and structural challenges that must be addressed first:

“The Real Mess Behind Your IFRS 17 Data Architecture” - Before diving into technical solutions, understand why IFRS 17 projects struggle. This piece reveals that the core challenge isn’t technical—it’s the collision between your company’s unique history of tech debt and a rigid regulatory mandate. You can’t code your way out of a trust problem.

“IFRS17 Data Quality: A Layered Unraveling of an Architectural Predicament” - Data quality issues in IFRS 17 aren’t just technical problems—they’re symptoms of deeper architectural predicaments. This article explores how data governance becomes office politics and why the subledger system is often a bad translator between incompatible business languages.

Together, these three articles form a complete picture: from organizational challenges to architectural principles to the fundamental physics of building time-traveling financial systems. The technical solutions outlined in this article only work when built on the organizational foundation established in the previous discussions.